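When you work in Python, you end up using scikit-learn's preprocessing utilities a lot.

You can handle each step one by one or lump everything together, but a Pipeline lets you organize the whole thing cleanly!

In this post I split the numerical and categorical variables, preprocess each group in a different way, and share what I tried.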

    import numpy as np
    import pandas as pd
    import matplotlib.pyplot as plt
    import seaborn as sns

    plt.style.use('ggplot')

    from sklearn.base import BaseEstimator
    from sklearn.base import TransformerMixin
    from sklearn.preprocessing import StandardScaler, RobustScaler
    from sklearn.impute import SimpleImputer
    from sklearn.preprocessing import FunctionTransformer
    from sklearn.pipeline import Pipeline
    from sklearn.pipeline import FeatureUnion
    from sklearn.model_selection import train_test_split
    from sklearn.model_selection import cross_val_score
    from sklearn.model_selection import KFold
    from sklearn.model_selection import StratifiedKFold
    from sklearn.model_selection import RandomizedSearchCV
    from sklearn.metrics import make_scorer
    from sklearn.metrics import accuracy_score
    from sklearn.metrics import classification_report
    from sklearn.metrics import confusion_matrix
    from sklearn.tree import DecisionTreeClassifier
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.ensemble import BaggingClassifier

    # load the dataset
    income = pd.read_csv("./../Data/income_evaluation.csv")

    # strip any stray whitespace from the column names
    newcol = [i.strip() for i in income.columns.tolist()]
    income.columns = newcol
    income.info()

    ######### Feature selection class #########
    class FeatureSelector(BaseEstimator, TransformerMixin):
        # Class constructor
        def __init__(self, feature_names):
            self.feature_names = feature_names

        # Return self, nothing else to do here
        def fit(self, X, y=None):
            return self

        # Keep only the requested columns of the DataFrame
        def transform(self, X, y=None):
            return X[self.feature_names]
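To see what the selector does on its own, here is a small sketch on a made-up DataFrame. The toy values are invented for illustration and are not taken from the income data, and the class is repeated only so the snippet runs by itself.

```python
import pandas as pd
from sklearn.base import BaseEstimator, TransformerMixin


class FeatureSelector(BaseEstimator, TransformerMixin):
    """Keep only the columns listed in feature_names."""

    def __init__(self, feature_names):
        self.feature_names = feature_names

    def fit(self, X, y=None):
        return self  # nothing to learn

    def transform(self, X, y=None):
        return X[self.feature_names]


# Toy data, invented for illustration only
toy = pd.DataFrame({
    "age": [39, 50, 38],
    "workclass": ["State-gov", "Private", "Private"],
    "hours-per-week": [40, 13, 40],
})

# fit_transform comes from TransformerMixin: it calls fit() and then transform()
selected = FeatureSelector(["age", "hours-per-week"]).fit_transform(toy)
print(selected.columns.tolist())
```

Because the selector returns a DataFrame rather than an array, later steps can still refer to columns by name.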

    ######### Missing-value imputation class #########
    class MissingTransformer(BaseEstimator, TransformerMixin):
        def __init__(self, MissingImputer):
            self.MissingImputer = MissingImputer

        # Fit the wrapped imputer here so that transform() reuses the learned fill values
        def fit(self, X, y=None):
            self.MissingImputer.fit(X)
            return self

        # Impute, then put the column names and index back (the imputer returns a bare array)
        def transform(self, X, y=None):
            cols = X.columns.tolist()
            result = self.MissingImputer.transform(X)
            result = pd.DataFrame(result, columns=cols, index=X.index)
            return result
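A point to keep in mind when wrapping an imputer like this: the fill values should be learned from the training rows and then reused on new rows. A minimal sketch with `SimpleImputer` on invented numbers, where the median learned in `fit` is what fills the gap at `transform` time:

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

# Invented data: the "test" frame has a very different distribution on purpose
train = pd.DataFrame({"age": [20.0, 30.0, np.nan, 40.0]})
test = pd.DataFrame({"age": [np.nan, 90.0]})

imputer = SimpleImputer(strategy="median")
imputer.fit(train)  # learns the median of the non-missing training values

# transform() returns a NumPy array, so rebuild the DataFrame by hand
filled = pd.DataFrame(imputer.transform(test),
                      columns=test.columns, index=test.index)
print(imputer.statistics_)
print(filled)
```

The missing test value is filled with the training median, not with anything computed from the test frame.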

    ######### Numerical feature class #########
    class NumericalTransformer(BaseEstimator, TransformerMixin):
        # Class constructor; new_features is not used in this class
        def __init__(self, new_features=True):
            self.new_features = new_features

        # Fit one scaler per column: RobustScaler for "age", StandardScaler otherwise
        def fit(self, X, y=None):
            self.scalers_ = {}
            for name in X.columns.to_list():
                scaler = RobustScaler() if name == "age" else StandardScaler()
                self.scalers_[name] = scaler.fit(X[name].values.reshape(-1, 1))
            return self

        # Apply the fitted scalers column by column
        def transform(self, X, y=None):
            df = X.copy()
            for name, scaler in self.scalers_.items():
                df[name] = scaler.transform(df[name].values.reshape(-1, 1)).ravel()
            # returns a DataFrame
            return df
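The same idea of "RobustScaler for `age`, StandardScaler for everything else" can also be written with scikit-learn's built-in `ColumnTransformer`. This is an alternative sketch, not the approach used in this post, and the toy values are invented.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import RobustScaler, StandardScaler

# Invented data with one outlier in "age"
toy = pd.DataFrame({
    "age": [25.0, 35.0, 45.0, 55.0, 90.0],
    "hours-per-week": [20.0, 40.0, 40.0, 50.0, 60.0],
})

scaler = ColumnTransformer([
    ("robust", RobustScaler(), ["age"]),                 # centers on the median, scales by IQR
    ("standard", StandardScaler(), ["hours-per-week"]),  # zero mean, unit variance
])

scaled = scaler.fit_transform(toy)  # NumPy array, columns in the order listed above
print(scaled.shape)
```

One trade-off: `ColumnTransformer` returns an array by default, whereas the custom class keeps the DataFrame and its column names.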

######### 범주형 변수 처리 Class #########

# converts certain features to categorical

class CategoricalTransformer( BaseEstimator, TransformerMixin ):

#Class constructor method that takes a boolean as its argument

def __init__(self, new_features=True):

self.new_features = new_features

#Return self nothing else to do here

def fit( self, X, y = None ):

return self

#Transformer method we wrote for this transformer

def transform(self, X , y = None ):

df = X.copy()

if self.new_features:

# Treat ? workclass as unknown

df['workclass']= df['workclass'].replace('?','Unknown')

# Two many category level, convert just US and Non-US

df.loc[df['native-country']!=' United-States','native-country'] = 'non_usa'

df.loc[df['native-country']==' United-States','native-country'] = 'usa'

# convert columns to categorical

columns =df.columns.to_list()

for name in columns :

col = pd.Categorical(df[name])

df[name] = col.codes

# returns numpy array

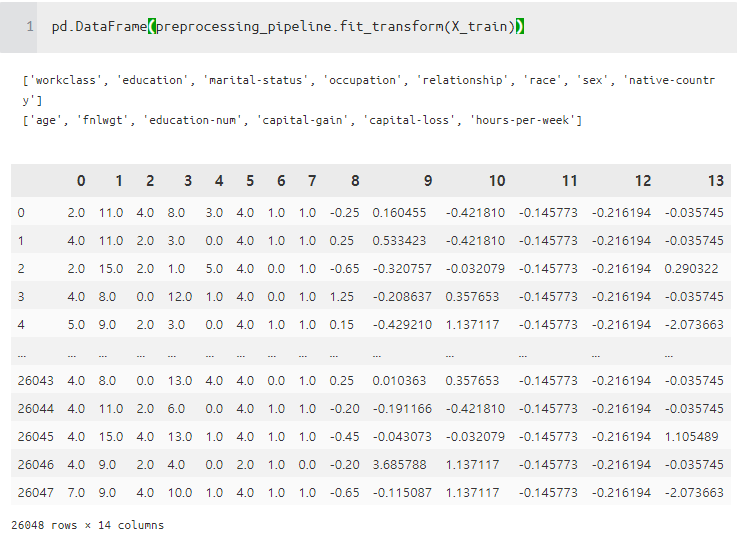

return df위에는 Class고 아래는 그것을 Pipeline을 실행
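One note on `CategoricalTransformer` before moving on: `pd.Categorical(...).codes` assigns codes from whatever values are in the frame being transformed, so the same label can receive different codes on different data. One way to keep the mapping fixed is `OrdinalEncoder`, which learns the categories in `fit`. A sketch on invented data, assuming scikit-learn 0.24 or newer for the `handle_unknown` option:

```python
import pandas as pd
from sklearn.preprocessing import OrdinalEncoder

# Invented data: "Never-worked" appears only in the test frame
train = pd.DataFrame({"workclass": ["Private", "State-gov", "Unknown", "Private"]})
test = pd.DataFrame({"workclass": ["State-gov", "Never-worked"]})

# Categories are learned once, in fit(); unseen labels are mapped to -1
encoder = OrdinalEncoder(handle_unknown="use_encoded_value", unknown_value=-1)
encoder.fit(train)

train_codes = encoder.transform(train).ravel()
test_codes = encoder.transform(test).ravel()
print(encoder.categories_[0].tolist())
print(train_codes.tolist(), test_codes.tolist())
```

Here "State-gov" gets the same code in both frames, which is what a downstream model needs.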

    y = income.pop("income")
    X = income

    # encode the target labels as integer codes
    col = pd.Categorical(y)
    y = pd.Series(col.codes)

    seed = 108

    # set the first three rows to NaN so the imputers have something to fill
    X.loc[[0, 1, 2]] = np.nan

    # get the categorical feature names
    categorical_features = X.select_dtypes("object").columns.to_list()
    # get the numerical feature names ("number" also matches integer columns)
    numerical_features = X.select_dtypes("number").columns.to_list()

    ######### Pipeline ############
    # create the steps for the categorical pipeline
    categorical_steps = [
        ('cat_selector', FeatureSelector(categorical_features)),
        ('imputer', MissingTransformer(SimpleImputer(strategy='constant',
                                                     missing_values=np.nan,
                                                     fill_value='missing'))),
        ('cat_transformer', CategoricalTransformer())
    ]

    # create the steps for the numerical pipeline
    numerical_steps = [
        ('num_selector', FeatureSelector(numerical_features)),
        ('imputer', MissingTransformer(SimpleImputer(strategy='median'))),
        ('std_scaler', NumericalTransformer()),
    ]

    # create the 2 pipelines with the respective steps
    categorical_pipeline = Pipeline(categorical_steps)
    numerical_pipeline = Pipeline(numerical_steps)

    pipeline_list = [
        ('categorical_pipeline', categorical_pipeline),
        ('numerical_pipeline', numerical_pipeline)
    ]

    # Combining the 2 pipelines horizontally into one full pipeline
    preprocessing_pipeline = FeatureUnion(transformer_list=pipeline_list)
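To actually run the combined preprocessing, you call `fit_transform` on the `FeatureUnion`. The sketch below does this on an invented four-row frame. So that it runs on its own, it swaps in `FunctionTransformer`, `OrdinalEncoder`, and `StandardScaler` for the custom classes, and `select` is a hypothetical helper rather than anything from the code above.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer
from sklearn.pipeline import FeatureUnion, Pipeline
from sklearn.preprocessing import FunctionTransformer, OrdinalEncoder, StandardScaler

# Invented data with one missing value per column
toy = pd.DataFrame({
    "age": [39.0, np.nan, 38.0, 53.0],
    "workclass": ["Private", "State-gov", np.nan, "Private"],
})


def select(columns):
    """Hypothetical helper: a transformer that keeps only the given columns."""
    return FunctionTransformer(lambda X: X[columns])


numerical = Pipeline([
    ("select", select(["age"])),
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])
categorical = Pipeline([
    ("select", select(["workclass"])),
    ("impute", SimpleImputer(strategy="constant", fill_value="missing")),
    ("encode", OrdinalEncoder()),
])

# FeatureUnion runs both pipelines on the same input and stacks the outputs side by side
union = FeatureUnion([("categorical", categorical), ("numerical", numerical)])
features = union.fit_transform(toy)
print(features.shape)
```

The output columns follow the order of the transformer list, so the categorical codes come first and the scaled numerical values second.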

In a later post I will share code that puts this variable preprocessing and GridSearch into a single Pipeline.

Reference

Ensemble Learning case study: Model Interpretability

https://towardsdatascience.com/ensemble-learning-and-model-interpretability-a-case-study-95141d75a96c
