PyTorch

An open source deep learning platform that provides a seamless path from research prototyping to production deployment.

pytorch.org

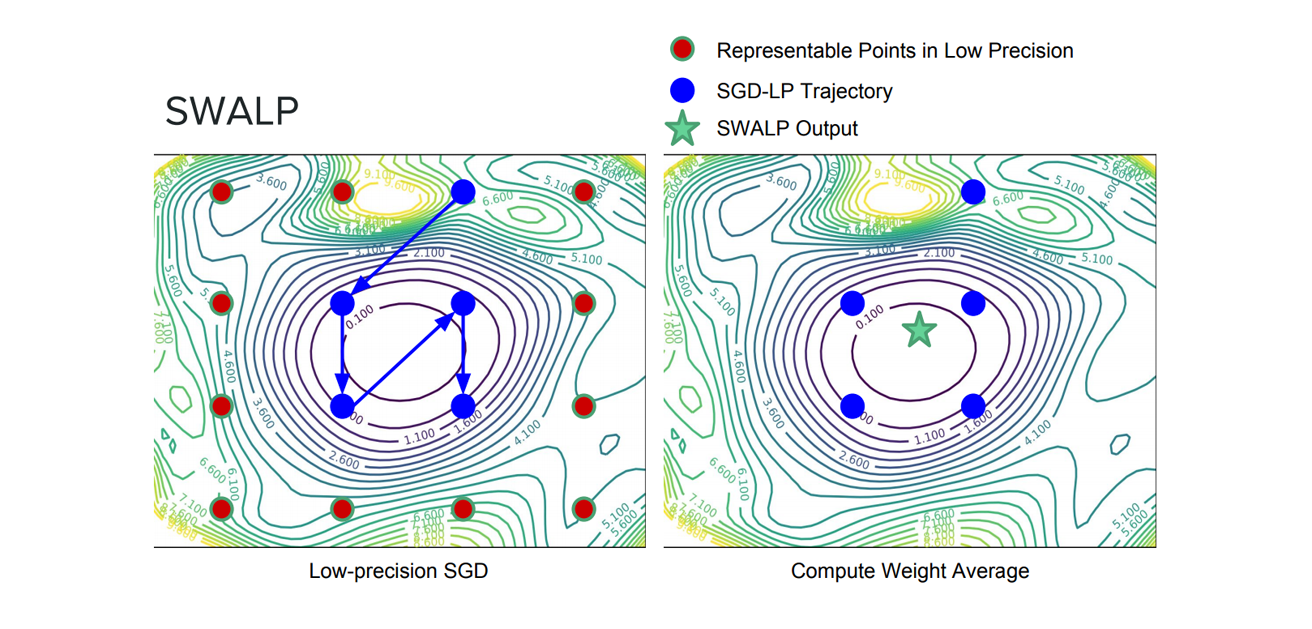

Seeing that SWA (Stochastic Weight Averaging) is now supported, I took a look to see what else changed in this release.

```python
import torch
from torch.optim.swa_utils import AveragedModel, SWALR
from torch.optim.lr_scheduler import CosineAnnealingLR

# Placeholders: define your own data loader, optimizer, model and loss function
loader, optimizer, model, loss_fn = ...
swa_model = AveragedModel(model)
scheduler = CosineAnnealingLR(optimizer, T_max=100)
swa_start = 5
swa_scheduler = SWALR(optimizer, swa_lr=0.05)

for epoch in range(100):
    for input, target in loader:
        optimizer.zero_grad()
        loss_fn(model(input), target).backward()
        optimizer.step()
    if epoch > swa_start:
        # SWA phase: fold the current weights into the running average
        # and follow the SWA learning-rate schedule
        swa_model.update_parameters(model)
        swa_scheduler.step()
    else:
        scheduler.step()

# Update bn statistics for the swa_model at the end
torch.optim.swa_utils.update_bn(loader, swa_model)
# Use swa_model to make predictions on test data (test_input is defined elsewhere)
preds = swa_model(test_input)
```
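What `swa_model.update_parameters(model)` does on each call can be sketched without PyTorch. This is a minimal sketch, assuming plain lists of floats stand in for parameter tensors: by default `AveragedModel` keeps an equal-weight running mean, copying the weights on the first call and afterwards updating each averaged parameter as `avg + (p - avg) / (n + 1)`, where `n` is the number of snapshots averaged so far. The `RunningAverage` class is a hypothetical stand-in, not the library class.

```python
from typing import List


class RunningAverage:
    """Hypothetical stand-in for AveragedModel's default equal-weight averaging."""

    def __init__(self) -> None:
        self.params: List[float] = []
        self.n_averaged = 0

    def update_parameters(self, model_params: List[float]) -> None:
        if self.n_averaged == 0:
            # First snapshot: copy the weights as-is
            self.params = list(model_params)
        else:
            # avg + (p - avg) / (n + 1) keeps an exact arithmetic mean of all snapshots
            n = self.n_averaged
            self.params = [a + (p - a) / (n + 1) for a, p in zip(self.params, model_params)]
        self.n_averaged += 1


# Pretend these are the weights at the end of three SWA epochs
snapshots = [[1.0, 4.0], [2.0, 5.0], [3.0, 9.0]]
swa = RunningAverage()
for weights in snapshots:
    swa.update_parameters(weights)

print(swa.params)  # [2.0, 6.0]
```

Because the averaged weights were never used in a forward pass during training, any BatchNorm running statistics stored in `swa_model` do not match them; recomputing those statistics is the job of the final `update_bn(loader, swa_model)` call.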

According to the release notes, this update focuses mainly on distributed data parallel (DDP) and remote procedure call (RPC) based distributed training.

I don't know this area well, so I'll just skim past it. If anyone knows it well, please leave a comment!

- Automatic mixed precision (AMP) training is now natively supported and a stable feature (See here for more details) - thanks to NVIDIA’s contributions;

- Native TensorPipe support now added for tensor-aware, point-to-point communication primitives built specifically for machine learning;

- Added support for complex tensors to the frontend API surface;

- New profiling tools providing tensor-level memory consumption information;

- Numerous improvements and new features for both distributed data parallel (DDP) training and the remote procedure call (RPC) packages.
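Native AMP in 1.6 is exposed through `torch.cuda.amp.autocast` and `torch.cuda.amp.GradScaler`. The scaler's core idea, dynamic loss scaling, can be sketched in plain Python: the loss is multiplied by a large scale so small float16 gradients do not underflow; if the scaled gradients overflow to inf/NaN the step is skipped and the scale shrinks, and after a run of clean steps the scale grows again. This is a simplified sketch, not GradScaler itself: the `LossScaler` class is hypothetical, and only its default values mirror GradScaler's documented `init_scale=2.**16`, `growth_factor=2.0`, `backoff_factor=0.5`, `growth_interval=2000`.

```python
import math
from typing import List


class LossScaler:
    """Hypothetical, simplified model of dynamic loss scaling (not GradScaler itself)."""

    def __init__(self, init_scale: float = 2.0 ** 16, growth_factor: float = 2.0,
                 backoff_factor: float = 0.5, growth_interval: int = 2000) -> None:
        self.scale = init_scale
        self.growth_factor = growth_factor
        self.backoff_factor = backoff_factor
        self.growth_interval = growth_interval
        self._good_steps = 0

    def step(self, scaled_grads: List[float]) -> bool:
        """Return True if the optimizer step would be taken, False if it is skipped."""
        if not all(math.isfinite(g) for g in scaled_grads):
            # Overflow: skip this step, shrink the scale, restart the clean-step count
            self.scale *= self.backoff_factor
            self._good_steps = 0
            return False
        # A real scaler would unscale here (g / self.scale) before the optimizer update
        self._good_steps += 1
        if self._good_steps == self.growth_interval:
            # Enough consecutive finite steps: try a larger scale
            self.scale *= self.growth_factor
            self._good_steps = 0
        return True


scaler = LossScaler(init_scale=1024.0, growth_interval=3)
history = [scaler.step(g) for g in ([1.0], [math.inf], [2.0], [3.0], [4.0])]
print(history, scaler.scale)  # [True, False, True, True, True] 1024.0
```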

Performance & Profiling

This is the one that caught my eye the most.

This feature is what won me over to TensorFlow, and as expected, PyTorch was quick to add it too.

[BETA] FORK/JOIN PARALLELISM
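Fork/join means launching several tasks without waiting for them (fork) and later collecting their results (join); in TorchScript, `torch.jit.fork` returns a future immediately and `torch.jit.wait` blocks on it. As a rough standard-library analogy only (this is not TorchScript, and plain Python threads are subject to the GIL), the same shape can be sketched with `concurrent.futures`; `neg` and `example` are illustrative names.

```python
from concurrent.futures import ThreadPoolExecutor


def neg(x: float) -> float:
    return -x


def example(x: float) -> float:
    with ThreadPoolExecutor(max_workers=4) as pool:
        # Fork: schedule 100 independent calls; each submit returns a Future immediately
        futures = [pool.submit(neg, x) for _ in range(100)]
        # Join: block on each Future in turn and collect its result
        results = [f.result() for f in futures]
    return sum(results)


print(example(1.0))  # -100.0
```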

```python
import torch


def foo(x):
    return torch.neg(x)


@torch.jit.script
def example(x):
    # Fork: launch 100 asynchronous calls to foo, each returning a future
    futures = [torch.jit.fork(foo, x) for _ in range(100)]
    # Join: wait for every future and gather the results
    results = [torch.jit.wait(future) for future in futures]
    return torch.sum(torch.stack(results))


print(example(torch.ones([])))
```

[BETA] MEMORY PROFILER

```python
import torch
import torchvision.models as models
import torch.autograd.profiler as profiler

model = models.resnet18()
inputs = torch.randn(5, 3, 224, 224)

with profiler.profile(profile_memory=True, record_shapes=True) as prof:
    model(inputs)

# NOTE: some columns were removed for brevity
print(prof.key_averages().table(sort_by="self_cpu_memory_usage", row_limit=10))
# ---------------------------  ---------------  ---------------  ---------------
# Name                         CPU Mem          Self CPU Mem     Number of Calls
# ---------------------------  ---------------  ---------------  ---------------
# empty                        94.79 Mb         94.79 Mb         123
# resize_                      11.48 Mb         11.48 Mb         2
# addmm                        19.53 Kb         19.53 Kb         1
# empty_strided                4 b              4 b              1
# conv2d                       47.37 Mb         0 b              20
# ---------------------------  ---------------  ---------------  ---------------
```
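In the profiler table, `CPU Mem` includes memory allocated by the operators an op calls, while `Self CPU Mem` counts only what the op allocates directly. That is why `conv2d` shows 47.37 Mb total but 0 b self: its allocations happen inside the operators it calls. A minimal sketch of the two columns over a made-up call tree (the `Op` class, names, and byte counts are all hypothetical):

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Op:
    name: str
    self_bytes: int  # allocated directly by this op ("Self CPU Mem")
    children: List["Op"] = field(default_factory=list)

    def total_bytes(self) -> int:
        # Inclusive ("CPU Mem"): own allocations plus everything the callees allocate
        return self.self_bytes + sum(c.total_bytes() for c in self.children)


# Hypothetical tree: a conv that allocates nothing itself but calls ops that do
conv = Op("conv2d", 0, [Op("empty", 4096), Op("empty", 1024)])
for op in [conv, *conv.children]:
    print(f"{op.name:<10} total={op.total_bytes():>5} self={op.self_bytes:>5}")
```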

Distributed Training & RPC

[BETA] TENSORPIPE BACKEND FOR RPC

This release introduces a new backend for the RPC module.

```python
import torch.distributed.rpc

# One-line change needed to opt in
torch.distributed.rpc.init_rpc(
    ...,  # the other init_rpc arguments are elided here
    backend=torch.distributed.rpc.BackendType.TENSORPIPE,
)

# No changes to the rest of the RPC API
torch.distributed.rpc.rpc_sync(...)
```

[BETA] DDP+RPC

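PyTorch Distributed supports two powerful paradigms: DDP for fully synchronous data-parallel training of models, and the RPC framework, which allows for distributed model parallelism. Previously, these two features worked independently and users couldn’t mix and match them to try out hybrid parallelism paradigms. Starting in 1.6 they can be combined, for example by keeping an embedding table on a parameter server via RPC while a dense model is trained with DDP.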
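The "fully synchronous" part of DDP means every replica ends each iteration with identical gradients: each process computes gradients on its own shard of the batch, an all-reduce averages them across processes, and every replica then applies the same update. A minimal single-process simulation, assuming a toy model `y = w * x` with squared-error loss; `local_grad` and `allreduce_mean` are hypothetical names, and the all-reduce is just a Python mean here.

```python
from typing import List, Tuple

Shard = List[Tuple[float, float]]  # (x, y) pairs held by one replica


def local_grad(w: float, shard: Shard) -> float:
    # d/dw of the mean of (w*x - y)^2 over this replica's shard
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def allreduce_mean(values: List[float]) -> float:
    # Stand-in for the all-reduce DDP performs across processes
    return sum(values) / len(values)


w = 0.0
lr = 0.1
shards: List[Shard] = [[(1.0, 2.0)], [(2.0, 4.0)]]  # two hypothetical replicas; target y = 2x
for _ in range(50):
    grads = [local_grad(w, s) for s in shards]  # computed independently per replica
    g = allreduce_mean(grads)                   # every replica receives the same value
    w -= lr * g                                 # so every replica applies the same step
print(round(w, 4))  # 2.0
```

With equal-sized shards, the averaged gradient equals the gradient over the whole batch, which is why the replicas stay in lockstep with a single-process run.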

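```python
import torch.distributed.autograd as dist_autograd
from torch.nn.parallel import DistributedDataParallel as DDP

# On each trainer (sketch: create_emb, dense_model and batch are placeholders)
remote_emb = create_emb(on="ps", ...)
ddp_model = DDP(dense_model)

for data in batch:
    with dist_autograd.context() as context_id:
        res = remote_emb(data)
        loss = ddp_model(res)
        # Distributed backward takes the id of the current autograd context
        dist_autograd.backward(context_id, [loss])
```

[BETA] RPC - ASYNCHRONOUS USER FUNCTIONS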

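It seems to provide a way to run user functions asynchronously, but I don't fully understand it yet. According to the RPC documentation, a function decorated with `@rpc.functions.async_execution` returns a `Future`, and the callee installs the remaining work as a callback on that future instead of blocking a thread while it waits.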

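```python
import torch
import torch.distributed.rpc as rpc


@rpc.functions.async_execution
def async_add_chained(to, x, y, z):
    # Runs on "worker1": send x + y to `to` without blocking,
    # then add z once that result arrives
    return rpc.rpc_async(to, torch.add, args=(x, y)).then(
        lambda fut: fut.wait() + z
    )


# Assumes rpc.init_rpc(...) has already been called on this process
ret = rpc.rpc_sync(
    "worker1",
    async_add_chained,
    args=("worker2", torch.ones(2), 1, 1)
)
print(ret)  # prints tensor([3., 3.])
```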
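The key piece is `.then(...)`: the decorated function hands back a future right away instead of holding a thread while `worker2` computes `x + y`, and the callback adds `z` once that result arrives. A rough standard-library analogy (not the RPC API; `then` and `add` are hypothetical helpers built on `concurrent.futures`, and plain lists stand in for the two-element tensors):

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, List


def then(fut: Future, fn: Callable[[Future], object]) -> Future:
    """Hypothetical helper: return a new Future completed with fn(fut) once fut is done."""
    chained: Future = Future()

    def _callback(done: Future) -> None:
        try:
            chained.set_result(fn(done))
        except Exception as exc:  # propagate errors to whoever waits on `chained`
            chained.set_exception(exc)

    # Runs _callback when fut finishes (immediately if it already has)
    fut.add_done_callback(_callback)
    return chained


def add(a: List[float], b: float) -> List[float]:
    return [v + b for v in a]


with ThreadPoolExecutor(max_workers=1) as remote:  # stands in for "worker2"
    z = 1
    # Like rpc_async(...).then(...): nothing blocks until the final .result()
    fut = then(remote.submit(add, [1.0, 1.0], 1), lambda f: add(f.result(), z))
    print(fut.result())  # [3.0, 3.0]
```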

Updated Domain Libraries

TORCHVISION 0.7

Release notes (Link)

TORCHAUDIO 0.6

Release notes (Link)

https://pytorch.org/blog/pytorch-1.6-released/


Welcome to PyTorch Tutorials (Korean edition) - PyTorch Tutorials 1.6.0 documentation

tutorials.pytorch.kr
