
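When doing analysis, you often need to draw several metric plots.

R provides many of these out of the box, but in Python I found that a surprising number of them have to be drawn by hand.

This time I want to introduce a package called scikit-plot (imported as `scikitplot`). It offers a variety of ready-made plots.

I may need it later, so here is a quick summary of the basic usage!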

## Multiclass

- ROC CURVE

- Confusion Matrix Plot

- silhouette

- precision_recall

## Binary

- lift_curve

- cumulative_gain

- ks_statistic

- calibration_curve

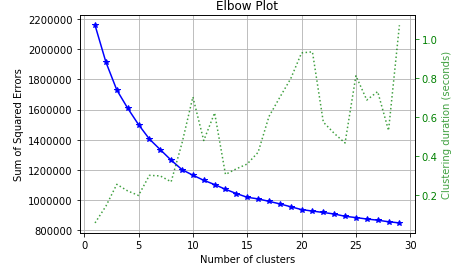

## Kmeans

- elbow curve (optimal cluster)

## PCA

- pca component variance

import matplotlib.pyplot as plt

import scikitplot as skplt

from sklearn.datasets import load_digits

from sklearn.ensemble import RandomForestClassifier

from sklearn.linear_model import LogisticRegression

from sklearn.naive_bayes import GaussianNB

from sklearn.svm import LinearSVC

from sklearn.cluster import KMeans

from sklearn.decomposition import PCA

## ROC Curve

X, y = load_digits(return_X_y=True)

random_forest_clf = RandomForestClassifier(n_estimators=5, max_depth=5, random_state=1)

rf = random_forest_clf.fit(X, y)

y_probas = rf.predict_proba(X)

skplt.metrics.plot_roc(y, y_probas)

plt.show()

## Confusion Matrix

predictions = rf.predict(X)

skplt.metrics.plot_confusion_matrix(y, predictions, normalize=True)

plt.show()
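With `normalize=True`, the matrix shows proportions instead of raw counts. As a rough illustration of the idea (not scikit-plot's actual code), the sketch below row-normalizes a small count matrix so that each true class sums to 1. I am assuming row-wise normalization here; the helper name `row_normalize` and the toy counts are made up for this example.

```python
def row_normalize(counts):
    """Divide each row by its sum so every true class sums to 1."""
    normalized = []
    for row in counts:
        total = sum(row)
        # Guard against classes with no samples to avoid dividing by zero.
        normalized.append([c / total if total else 0.0 for c in row])
    return normalized


# Toy 3-class confusion matrix: rows are true labels, columns are predictions.
toy_counts = [
    [8, 2, 0],
    [1, 6, 3],
    [0, 0, 5],
]
toy_normalized = row_normalize(toy_counts)
for row in toy_normalized:
    print([round(v, 2) for v in row])
```

Reading a row then tells you how the samples of one true class were distributed across the predicted classes, which is easier to compare when class sizes differ.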

## silhouette

skplt.metrics.plot_silhouette(X, predictions)

plt.show()

## Precision Recall

skplt.metrics.plot_precision_recall(y, y_probas)

plt.show()

## Binary Class

Keep only the samples labeled 0 or 1 so the digits data becomes a binary problem.

zero_one_index = (y == 0) | (y == 1)

X_1, Y_1 = X[zero_one_index], y[zero_one_index]

## KS Curve

rf = random_forest_clf.fit(X_1 , Y_1)

y_probas = rf.predict_proba(X_1)

skplt.metrics.plot_ks_statistic(Y_1, y_probas)

plt.show()
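The KS statistic is the largest vertical gap between the cumulative score distributions of the two classes. The sketch below computes it in plain Python for a toy example, just to show what the plot is summarizing. It is not scikit-plot's implementation; the function name `ks_statistic` and the toy labels and scores are assumptions for illustration, and the scores are taken to be the predicted probability of class 1.

```python
from bisect import bisect_right


def ks_statistic(y_true, scores):
    """Return the largest gap between the two class score CDFs and its threshold."""
    pos = sorted(s for s, t in zip(scores, y_true) if t == 1)
    neg = sorted(s for s, t in zip(scores, y_true) if t == 0)
    if not pos or not neg:
        raise ValueError("both classes must be present")
    best_gap, best_threshold = 0.0, None
    for threshold in sorted(set(scores)):
        # Fraction of each class with a score at or below the threshold.
        cdf_pos = bisect_right(pos, threshold) / len(pos)
        cdf_neg = bisect_right(neg, threshold) / len(neg)
        gap = abs(cdf_pos - cdf_neg)
        if gap > best_gap:
            best_gap, best_threshold = gap, threshold
    return best_gap, best_threshold


# Toy data: higher scores mostly belong to class 1.
toy_labels = [0, 0, 0, 1, 0, 1, 1, 1]
toy_scores = [0.1, 0.2, 0.3, 0.35, 0.4, 0.6, 0.8, 0.9]
ks_gap, ks_threshold = ks_statistic(toy_labels, toy_scores)
print(ks_gap, ks_threshold)
```

A value close to 1 means the scores separate the two classes almost perfectly, while a value near 0 means the two score distributions overlap heavily.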

## Cumulative Gain

skplt.metrics.plot_cumulative_gain(Y_1, y_probas)

plt.show()

## Lift Curve

skplt.metrics.plot_lift_curve(Y_1, y_probas)

plt.show()
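The cumulative gain and lift plots are two views of the same ranking. Sort the samples by score from high to low, then track what fraction of all positives has been captured after looking at the top x% of samples (gain); dividing that by x gives the lift over random selection. A minimal plain-Python sketch, assuming 1-D positive-class scores and using hypothetical helper names (`cumulative_gain`, `lift`), not scikit-plot's own code:

```python
def cumulative_gain(y_true, scores):
    """Return (fraction_of_samples, fraction_of_positives_captured) points."""
    total_pos = sum(y_true)
    if total_pos == 0:
        raise ValueError("at least one positive sample is required")
    # Rank labels from the highest score to the lowest.
    ranked = [t for _, t in sorted(zip(scores, y_true),
                                   key=lambda pair: pair[0], reverse=True)]
    points, captured = [], 0
    for i, label in enumerate(ranked, start=1):
        captured += label
        points.append((i / len(ranked), captured / total_pos))
    return points


def lift(points):
    """Lift is the gain divided by the fraction of samples examined."""
    return [(frac, gain / frac) for frac, gain in points]


# Toy data: 3 positives among 8 samples, mostly ranked near the top.
toy_y = [1, 1, 0, 1, 0, 0, 0, 0]
toy_s = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2]
gain_points = cumulative_gain(toy_y, toy_s)
lift_points = lift(gain_points)
print(gain_points[3], lift_points[1])
```

In this toy case the top half of the ranking already contains every positive, and the lift always falls back to 1 once all samples are included.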

## calibration_curve

rf = RandomForestClassifier()

lr = LogisticRegression()

nb = GaussianNB()

svm = LinearSVC()

rf_probas = rf.fit(X_1, Y_1).predict_proba(X_1)

lr_probas = lr.fit(X_1, Y_1).predict_proba(X_1)

nb_probas = nb.fit(X_1, Y_1).predict_proba(X_1)

svm_scores = svm.fit(X_1, Y_1).decision_function(X_1)

probas_list = [rf_probas, lr_probas, nb_probas, svm_scores]

clf_names = ['Random Forest', 'Logistic Regression', 'Gaussian Naive Bayes', 'Support Vector Machine']

skplt.metrics.plot_calibration_curve(Y_1, probas_list, clf_names)

plt.show()

## elbow_curve

kmeans = KMeans(random_state=1)

skplt.cluster.plot_elbow_curve(kmeans, X, cluster_ranges=range(1, 30))

plt.show()

## PCA Component Variance

pca = PCA(random_state=1)

pca.fit(X)

skplt.decomposition.plot_pca_component_variance(pca)

plt.show()
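The plot above is built from the cumulative explained variance ratio of the fitted PCA. To make that concrete, here is a small sketch that accumulates per-component ratios and finds how many components are needed to reach a target share of the variance. The ratios, the 0.75 target, and the helper name `components_needed` are all assumptions chosen for the example; with a real model you would pass in `pca.explained_variance_ratio_`.

```python
from itertools import accumulate


def components_needed(variance_ratios, target=0.75):
    """Return (n, cumulative) where n is the first count reaching the target."""
    cumulative = list(accumulate(variance_ratios))
    for n, total in enumerate(cumulative, start=1):
        if total >= target:
            return n, cumulative
    # The target was never reached with the given components.
    return None, cumulative


# Hypothetical per-component explained variance ratios, largest first.
toy_ratios = [0.5, 0.2, 0.15, 0.1, 0.05]
n_components, cumulative_ratios = components_needed(toy_ratios)
print(n_components, [round(v, 2) for v in cumulative_ratios])
```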

- End -

https://scikit-plot.readthedocs.io/en/stable/cluster.html

