
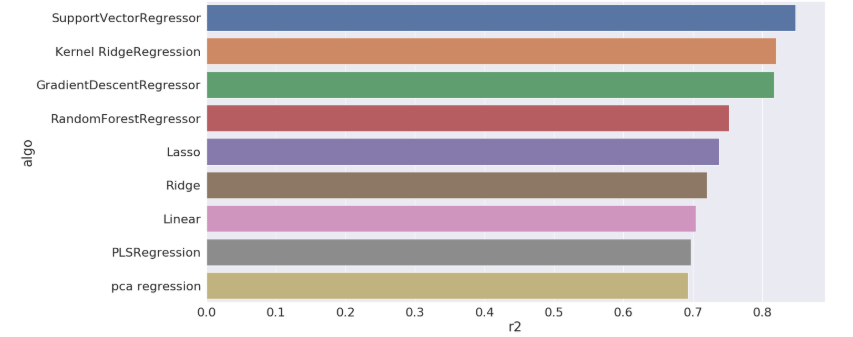

This time I wrote code that compares several regression models.

I had never had a chance to use Pipeline, so I didn't know much about it, but it turns out to be really convenient!
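To make the convenience concrete, here is a minimal sketch of what a Pipeline does. The toy data and the choice of Ridge are my own assumptions for illustration, not part of the comparison code in this post. The point is that one `fit` call fits the scaler on the training rows only and then passes the scaled data to the regressor.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Toy data (hypothetical): two features on very different scales.
rng = np.random.RandomState(0)
X = rng.normal(size=(100, 2)) * [1.0, 1000.0]
y = 3.0 * X[:, 0] + 0.002 * X[:, 1] + rng.normal(scale=0.1, size=100)

# Each step is a (name, estimator) pair; the last step is the model.
pipe = Pipeline([
    ("scl", StandardScaler()),
    ("fit", Ridge(alpha=1.0)),
])

# fit() learns the scaling statistics from the first 80 rows only,
# then trains Ridge on the scaled version of those rows.
pipe.fit(X[:80], y[:80])

# score() reuses the stored statistics to scale the held-out rows.
score = pipe.score(X[80:], y[80:])
scaler_mean = pipe.named_steps["scl"].mean_
print(score, scaler_mean)
```

Because the scaler never sees the held-out rows during fitting, the same object can be handed to a cross-validation or grid search routine without leaking test information.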

from sklearn.linear_model import LinearRegression, Ridge, Lasso, ElasticNet

from sklearn.linear_model import LassoCV , ElasticNetCV , RidgeCV

from sklearn.preprocessing import PolynomialFeatures

from sklearn.decomposition import PCA

from sklearn.metrics import mean_squared_error as mse

from sklearn.metrics import r2_score as r2

from sklearn.model_selection import cross_val_score

from sklearn.ensemble import GradientBoostingRegressor as GBR

from sklearn.ensemble import RandomForestRegressor as RFR

from sklearn.cross_decomposition import PLSRegression as PLS

from sklearn.svm import SVR

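from sklearn.kernel_ridge import KernelRidge

# Imports the code below also needs (they were missing from the listing)

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn import datasets

from sklearn.model_selection import train_test_split, ShuffleSplit, GridSearchCV

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import StandardScaler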

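# Note: load_boston was deprecated in scikit-learn 1.0 and removed in 1.2, so this line needs an older release

boston = datasets.load_boston()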

X_train, X_test, y_train, y_test = train_test_split(

boston.data, boston.target, test_size=0.4, random_state=0)

cv = ShuffleSplit(n_splits=5 , test_size=0.3, random_state=42)
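As a side note on the `cv` object: ShuffleSplit draws a fresh random train/validation split on every iteration, so the validation sets of different iterations may overlap, unlike K-fold. A small sketch on a made-up array of 10 rows (the array is only an assumption for illustration) shows what the splitter yields:

```python
import numpy as np
from sklearn.model_selection import ShuffleSplit

# Hypothetical data: 10 rows, 2 columns.
X = np.arange(20).reshape(10, 2)

# Same settings as in the post: 5 random splits, 30% held out each time.
cv = ShuffleSplit(n_splits=5, test_size=0.3, random_state=42)

splits = list(cv.split(X))
for train_idx, test_idx in splits:
    # Indices within one split never overlap, but the held-out rows
    # of different splits can repeat.
    print(len(train_idx), len(test_idx), sorted(test_idx))
```

GridSearchCV calls `cv.split` on the training data in the same way, once per parameter candidate.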

pipe_linear = Pipeline([

('scl', StandardScaler()),

('poly', PolynomialFeatures()),

('fit', LinearRegression())])

pipe_lasso = Pipeline([

('scl', StandardScaler()),

('poly', PolynomialFeatures()),

('fit', Lasso(random_state = 42))])

pipe_ridge = Pipeline([

('scl', StandardScaler()),

('poly', PolynomialFeatures()),

('fit', Ridge(random_state = 42))])

pipe_pca = Pipeline([

('scl', StandardScaler()),

('pca', PCA()),

('fit', Ridge(random_state = 42))])

pipe_pls = Pipeline([

('scl', StandardScaler()),

('fit', PLS())])

pipe_gbr = Pipeline([

('scl', StandardScaler()),

('fit', GBR())])

pipe_rfr = Pipeline([

('scl', StandardScaler()),

('fit', RFR())])

pipe_svr = Pipeline([

('scl', StandardScaler()),

('fit', SVR())])

pipe_KR = Pipeline([

('scl', StandardScaler()),

('fit', KernelRidge())])

###

grid_params_linear = [{

"poly__degree" : np.arange(1,3),

"fit__fit_intercept" : [True, False],

}]

grid_params_lasso = [{

"poly__degree" : np.arange(1,3),

"fit__tol" : np.logspace(-5,0,10) ,

"fit__alpha" : np.logspace(-5,1,10) ,

}]

grid_params_pca = [{

"pca__n_components" : np.arange(2,8)

}]

grid_params_ridge = [{

"poly__degree" : np.arange(1,3),

"fit__alpha" : np.linspace(2,5,10) ,

"fit__solver" : [ "cholesky","lsqr","sparse_cg"] ,

"fit__tol" : np.logspace(-5,0,10) ,

}]

grid_params_pls = [{

"fit__n_components" : np.arange(2,8)

}]

min_samples_split_range = [0.5, 0.7 , 0.9]

grid_params_gbr =[{

"fit__max_features" : ["sqrt","log2"] ,

"fit__loss" : ["ls","lad","huber","quantile"] , # scikit-learn >= 1.0 names the first two "squared_error" and "absolute_error"

"fit__max_depth" : [5,6,7,8] ,

"fit__min_samples_split" : min_samples_split_range ,

}]

grid_params_rfr =[{

"fit__max_features" : ["sqrt","log2"] ,

"fit__max_depth" : [5,6,7,8] ,

"fit__min_samples_split" : min_samples_split_range ,

}]

grid_params_svr =[{

"fit__kernel" : ["rbf", "linear"] ,

"fit__degree" : [2, 3, 5] , # degree is only used by the "poly" kernel, so it has no effect on the two kernels above

"fit__gamma" : np.logspace(-5,1,10) ,

}]

grid_params_KR =[{

"fit__kernel" : ["rbf","linear"] ,

"fit__gamma" : np.logspace(-5,1,10) ,

}]
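The keys in the grids above follow the `<step name>__<parameter>` convention: the part before the double underscore is the name given to the step in the Pipeline, and the part after it is a parameter of that step. A minimal sketch, using a smaller grid that I made up for illustration:

```python
from sklearn.linear_model import Ridge
from sklearn.model_selection import ParameterGrid
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

pipe = Pipeline([
    ("scl", StandardScaler()),
    ("poly", PolynomialFeatures()),
    ("fit", Ridge()),
])

# "poly__degree" reaches the degree of the "poly" step,
# "fit__alpha" reaches the alpha of the "fit" step.
pipe.set_params(poly__degree=2, fit__alpha=3.0)
degree = pipe.named_steps["poly"].degree
alpha = pipe.named_steps["fit"].alpha

# Hypothetical small grid: the number of candidates is the product
# of the list lengths (2 * 3 here).
grid = {"poly__degree": [1, 2], "fit__alpha": [2.0, 3.5, 5.0]}
n_candidates = len(ParameterGrid(grid))

# Every grid key must be a valid parameter name of the pipeline.
valid_keys = all(key in pipe.get_params() for key in grid)
print(degree, alpha, n_candidates, valid_keys)
```

By the same counting, the Ridge grid in this post has 2 * 10 * 3 * 10 = 600 candidates, which with 5 splits means 3000 fits, so trimming the `tol` range is an easy way to cut the run time.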

pipe = [

pipe_linear , pipe_lasso , pipe_pca ,

pipe_ridge , pipe_pls , pipe_gbr ,

pipe_rfr , pipe_svr , pipe_KR

]

params = [

grid_params_linear , grid_params_lasso , grid_params_pca,

grid_params_ridge , grid_params_pls , grid_params_gbr ,

grid_params_rfr , grid_params_svr , grid_params_KR

]

jobs = 20

grid_dict = {

0: 'Linear',

1: 'Lasso',

2: 'pca regression' ,

3: 'Ridge' ,

4: 'PLSRegression',

5: "GradientBoostingRegressor" ,

6: "RandomForestRegressor" ,

7: "SupportVectorRegressor" ,

8: "Kernel RidgeRegression"

}

model_mse = {}

model_r2 = {}

model_best_params = {}

for idx , (param , model) in enumerate(zip(params , pipe)) :

    # iid was removed from GridSearchCV in scikit-learn 0.24, and verbose must not be negative

    search = GridSearchCV(model, param, scoring = "neg_mean_squared_error" ,

                          cv=cv , n_jobs=jobs , verbose=0 )

    # Tune on the training set, then evaluate the refitted best model on the test set

    search.fit(X_train , y_train)

    y_pred = search.predict(X_test)

    model_mse[grid_dict.get(idx)] = mse(y_test, y_pred)

    model_r2[grid_dict.get(idx)] = r2(y_test, y_pred)

    model_best_params[grid_dict.get(idx)] = search.best_params_

print("finish")
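Since the Boston dataset is no longer shipped with recent scikit-learn, here is a reduced sketch of the same search loop that runs on synthetic data. The dataset from `make_regression`, the two candidate models, and the small grids are all assumptions chosen to keep it quick; they are not the settings used for the results in this post.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, Ridge
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import GridSearchCV, ShuffleSplit, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the real data (hypothetical).
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.4, random_state=0)
cv = ShuffleSplit(n_splits=3, test_size=0.3, random_state=42)

# name -> (pipeline, parameter grid); keeping them together avoids
# having to keep three parallel lists in the same order.
candidates = {
    "Ridge": (Pipeline([("scl", StandardScaler()), ("fit", Ridge())]),
              {"fit__alpha": [0.1, 1.0, 10.0]}),
    "Lasso": (Pipeline([("scl", StandardScaler()), ("fit", Lasso())]),
              {"fit__alpha": [0.01, 0.1, 1.0]}),
}

results = {}
for name, (pipeline, grid) in candidates.items():
    search = GridSearchCV(pipeline, grid,
                          scoring="neg_mean_squared_error", cv=cv)
    search.fit(X_train, y_train)        # tune on the training set only
    y_pred = search.predict(X_test)     # best model, refit on all of X_train
    results[name] = {
        "mse": mean_squared_error(y_test, y_pred),
        "r2": r2_score(y_test, y_pred),
        "best_params": search.best_params_,
    }

for name, res in results.items():
    print(name, res)
```

Using one dict keyed by model name is a design choice of this sketch; the post's version with `pipe`, `params` and `grid_dict` works the same way as long as the three stay in the same order.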

fig ,ax = plt.subplots(figsize=(20, 10))

sns.set(font_scale = 2)

# Build the table column by column so that r2 stays a numeric column

output = pd.DataFrame({"algo" : list(model_r2.keys()) , "r2" : list(model_r2.values())})

output.sort_values(["r2"], ascending= False ,inplace=True)

sns.barplot(y="algo", x="r2", data=output , ax=ax)

plt.show()

model_mse , model_r2

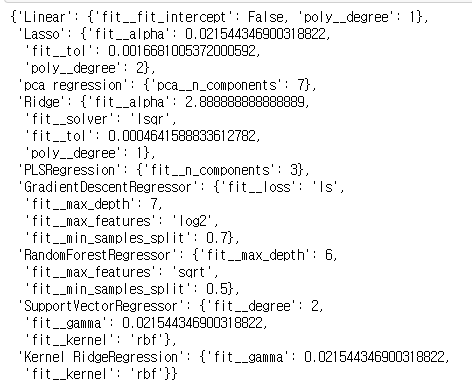
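For reading the two score dictionaries: MSE is the mean of the squared residuals (lower is better), and R² is one minus the ratio of the residual sum of squares to the total sum of squares (closer to 1 is better). A small sketch with made-up numbers, checked against the sklearn functions used in this post:

```python
import numpy as np
from sklearn.metrics import mean_squared_error, r2_score

# Hypothetical targets and predictions.
y_true = np.array([3.0, 5.0, 7.0, 9.0])
y_pred = np.array([2.5, 5.5, 7.0, 8.0])

# MSE: average squared residual.
mse_manual = np.mean((y_true - y_pred) ** 2)

# R^2: 1 - SS_res / SS_tot.
ss_res = np.sum((y_true - y_pred) ** 2)
ss_tot = np.sum((y_true - y_true.mean()) ** 2)
r2_manual = 1.0 - ss_res / ss_tot

print(mse_manual, mean_squared_error(y_true, y_pred))
print(r2_manual, r2_score(y_true, y_pred))
```

The grid search itself ranks candidates by `neg_mean_squared_error`, the negated MSE, because GridSearchCV always maximizes its score.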

model_best_params

Related post: Building various classification models with sklearn Pipeline (https://data-newbie.tistory.com/185)

In that post I compare the classification models that sklearn provides. I first tried to put everything into a single Pipeline, but it kept getting tangled, so I switched to building several pipelines instead.

GitHub: sungreong/TIL
