
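In this post, I build a complete model and then use eli5 and shap to interpret it.

The key points to look at are the Pipeline, shap, and eli5.

For model interpretation there are lime, shap, and eli5. All of them are good, but I personally prefer shap, so I plan to write it up in more detail later to understand it better.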

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import eli5
import shap
import seaborn as sns
plt.style.use('ggplot')
# scikit-learn imports
from sklearn.base import BaseEstimator
from sklearn.base import TransformerMixin
from sklearn.preprocessing import StandardScaler, RobustScaler
from sklearn.impute import SimpleImputer
from sklearn.preprocessing import FunctionTransformer
from sklearn.pipeline import Pipeline
from sklearn.pipeline import FeatureUnion
from sklearn.model_selection import train_test_split
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import KFold
from sklearn.model_selection import StratifiedKFold
from sklearn.model_selection import RandomizedSearchCV
from sklearn.metrics import make_scorer
from sklearn.metrics import accuracy_score
from sklearn.metrics import classification_report
from sklearn.metrics import confusion_matrix
from sklearn.tree import DecisionTreeClassifier
from sklearn.ensemble import RandomForestClassifier
from sklearn.ensemble import BaggingClassifier

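### data
income = pd.read_csv("./../Data/income_evaluation.csv")
# strip stray whitespace from the column names
newcol = [i.strip() for i in income.columns.tolist()]
income.columns = newcol
# NOTE: X (features), y (target), and seed are used below, but the post does not show where they are defined.

### Building the Pipeline classes ###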

# Custom Transformer that extracts columns passed as argument
class FeatureSelector(BaseEstimator, TransformerMixin):
    # Class constructor
    def __init__(self, feature_names):
        self.feature_names = feature_names

    # Return self, nothing else to do here
    def fit(self, X, y=None):
        return self

    # Return only the requested columns
    def transform(self, X, y=None):
        return X[self.feature_names]

# Wraps an imputer so that the result stays a DataFrame with the original column names
class MissingTransformer(BaseEstimator, TransformerMixin):
    def __init__(self, MissingImputer):
        self.MissingImputer = MissingImputer

    # Return self, nothing else to do here
    def fit(self, X, y=None):
        return self

    def transform(self, X, y=None):
        cols = X.columns.tolist()
        df = X.copy()
        # NOTE: the imputer is re-fitted on whatever data is passed to transform()
        result = self.MissingImputer.fit_transform(df)
        result = pd.DataFrame(result, columns=cols)
        return result

# scales the numerical features
class NumericalTransformer(BaseEstimator, TransformerMixin):
    # Class constructor method that takes a boolean as its argument
    def __init__(self, new_features=True):
        self.new_features = new_features

    # Return self, nothing else to do here
    def fit(self, X, y=None):
        return self

    # Transformer method we wrote for this transformer
    def transform(self, X, y=None):
        df = X.copy()
        # scale each column: RobustScaler for "age", StandardScaler for the rest
        # NOTE: the scalers are re-fitted on whatever data is passed to transform()
        columns = df.columns.to_list()
        for name in columns:
            if name == "age":
                value = RobustScaler().fit_transform(df[name].values.reshape(-1, 1))
            else:
                value = StandardScaler().fit_transform(df[name].values.reshape(-1, 1))
            df[name] = value
        # returns a DataFrame
        return df
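The transformers above do their fitting inside `transform()`, so the test set ends up scaled with its own statistics rather than those of the training set. As a point of comparison, here is a minimal sketch of a variant that learns the scalers in `fit()` and only applies them in `transform()`. The class name `FittedNumericalScaler`, the `robust_columns` parameter, and the toy data are all hypothetical and not part of the original post.

```python
import pandas as pd
from sklearn.base import BaseEstimator, TransformerMixin
from sklearn.preprocessing import RobustScaler, StandardScaler


class FittedNumericalScaler(BaseEstimator, TransformerMixin):
    """Hypothetical variant: learns one scaler per column in fit()."""

    def __init__(self, robust_columns=("age",)):
        self.robust_columns = robust_columns

    def fit(self, X, y=None):
        # Learn the scaling statistics only from the data passed to fit().
        self.scalers_ = {}
        for name in X.columns:
            scaler = RobustScaler() if name in self.robust_columns else StandardScaler()
            self.scalers_[name] = scaler.fit(X[[name]])
        return self

    def transform(self, X, y=None):
        # Reuse the statistics learned in fit(); nothing is re-estimated here.
        df = X.copy()
        for name, scaler in self.scalers_.items():
            df[name] = scaler.transform(df[[name]]).ravel()
        return df


# Toy data (an assumption for illustration only)
train = pd.DataFrame({"age": [20.0, 30.0, 40.0], "hours": [10.0, 20.0, 30.0]})
test = pd.DataFrame({"age": [40.0], "hours": [30.0]})

scaler = FittedNumericalScaler().fit(train)
scaled_test = scaler.transform(test)
print(scaled_test)
```

With this layout the single test row is scaled using the median, IQR, mean, and standard deviation of the training rows, which is what a Pipeline normally expects from its steps.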

# converts certain features to categorical
class CategoricalTransformer(BaseEstimator, TransformerMixin):
    # Class constructor method that takes a boolean as its argument
    def __init__(self, new_features=True):
        self.new_features = new_features

    # Return self, nothing else to do here
    def fit(self, X, y=None):
        return self

    # Transformer method we wrote for this transformer
    def transform(self, X, y=None):
        df = X.copy()
        if self.new_features:
            # Treat ? workclass as unknown
            df['workclass'] = df['workclass'].replace('?', 'Unknown')
            # Too many category levels, so keep just US and non-US
            df.loc[df['native-country'] != ' United-States', 'native-country'] = 'non_usa'
            df.loc[df['native-country'] == ' United-States', 'native-country'] = 'usa'
        # convert every column to integer category codes
        columns = df.columns.to_list()
        for name in columns:
            col = pd.Categorical(df[name])
            df[name] = col.codes
        # returns a DataFrame
        return df

### Building the Pipeline ###

# get the categorical feature names
categorical_features = X.select_dtypes("object").columns.to_list()
# get the numerical feature names
numerical_features = X.select_dtypes("float").columns.to_list()
# create the steps for the categorical pipeline
categorical_steps = [
    ('cat_selector', FeatureSelector(categorical_features)),
    ('imputer', MissingTransformer(SimpleImputer(strategy='constant',
                                                 missing_values=np.nan,
                                                 fill_value='missing'))),
    ('cat_transformer', CategoricalTransformer())
]
# create the steps for the numerical pipeline
numerical_steps = [
    ('num_selector', FeatureSelector(numerical_features)),
    ('imputer', MissingTransformer(SimpleImputer(strategy='median'))),
    ('std_scaler', NumericalTransformer()),
]
# create the 2 pipelines with the respective steps
categorical_pipeline = Pipeline(categorical_steps)
numerical_pipeline = Pipeline(numerical_steps)
pipeline_list = [
    ('categorical_pipeline', categorical_pipeline),
    ('numerical_pipeline', numerical_pipeline)
]
# Combining the 2 pipelines horizontally into one full pipeline
preprocessing_pipeline = FeatureUnion(transformer_list=pipeline_list)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.20,
                                                    random_state=seed, shuffle=True, stratify=y)

### Pipeline + RandomForest + RandomizedSearchCV ###

# we pass the preprocessing pipeline as a step to the full pipeline
full_pipeline_steps = [
    ('preprocessing_pipeline', preprocessing_pipeline),
    ('model', RandomForestClassifier(random_state=seed))
]
# create the full pipeline object
full_pipeline = Pipeline(steps=full_pipeline_steps)
# create the random search parameter distributions and scoring functions
param_grid = {
    "model": [RandomForestClassifier(random_state=seed)],
    # max_depth must be an integer; np.linspace would return floats
    "model__max_depth": np.arange(1, 33),
    "model__n_estimators": np.arange(100, 1000, 100),
    "model__criterion": ["gini", "entropy"],
    "model__max_leaf_nodes": [16, 64, 128, 256],
    "model__oob_score": [True],
}
scoring = {
    'AUC': 'roc_auc',
    'Accuracy': make_scorer(accuracy_score)
}
# create the Kfold object (random_state only takes effect with shuffle=True)
num_folds = 3
kfold = StratifiedKFold(n_splits=num_folds, shuffle=True, random_state=seed)
# create the random search object with the full pipeline as estimator
n_iter = 3
grid = RandomizedSearchCV(
    estimator=full_pipeline,
    param_distributions=param_grid,
    cv=kfold,
    scoring=scoring,
    n_jobs=1,
    n_iter=n_iter,
    refit="AUC"
)
# fit the random search
best_rf = grid.fit(X_train, y_train)

print(f'Best score: {best_rf.best_score_}')

print(f'Best model: {best_rf.best_params_}')

pred_test = best_rf.predict(X_test)

pred_train = best_rf.predict(X_train)

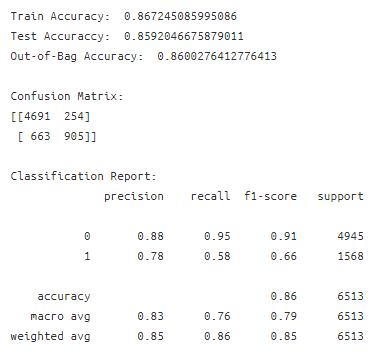

print('Train Accuracy: ', accuracy_score(y_train, pred_train))
print('Test Accuracy: ', accuracy_score(y_test, pred_test))
# read the OOB score from the refitted model inside best_estimator_
print("Out-of-Bag Accuracy: ", best_rf.best_estimator_.named_steps['model'].oob_score_)
print('\nConfusion Matrix:')
print(confusion_matrix(y_test, pred_test))
print('\nClassification Report:')
print(classification_report(y_test, pred_test))
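Because the search was given two scorers, `cv_results_` holds one set of columns per scorer name (`mean_test_AUC`, `mean_test_Accuracy`, and so on), while `best_score_` follows the metric named in `refit`. The sketch below shows how those columns could be inspected. It uses synthetic data from `make_classification` and a deliberately tiny search space, both of which are assumptions for illustration and not the settings from this post.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, make_scorer
from sklearn.model_selection import RandomizedSearchCV, StratifiedKFold

# Synthetic stand-in data (an assumption; the post uses the income dataset).
X_demo, y_demo = make_classification(n_samples=200, n_features=6, random_state=0)

search = RandomizedSearchCV(
    estimator=RandomForestClassifier(random_state=0),
    param_distributions={"n_estimators": [10, 20], "max_depth": [2, 4]},
    scoring={"AUC": "roc_auc", "Accuracy": make_scorer(accuracy_score)},
    cv=StratifiedKFold(n_splits=3, shuffle=True, random_state=0),
    n_iter=3,
    refit="AUC",  # best_score_ and best_estimator_ are chosen by this metric
    random_state=0,
)
search.fit(X_demo, y_demo)

# One row per sampled candidate, one column group per scorer name.
results = pd.DataFrame(search.cv_results_)
summary = results[
    ["params", "mean_test_AUC", "mean_test_Accuracy", "rank_test_AUC"]
].sort_values("rank_test_AUC")
print(summary)
```

Looking at both metric columns side by side makes it easier to see whether the candidate with the best AUC is also competitive on accuracy.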

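# let's get the fitted random forest model and the feature names
rf_model = best_rf.best_estimator_.named_steps['model']
# FeatureUnion outputs the categorical columns first, then the numerical ones
features = np.array(categorical_features + numerical_features)
new_X_test = best_rf.best_estimator_.named_steps['preprocessing_pipeline'].transform(X_test)
new_X_test = pd.DataFrame(new_X_test, columns=features)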

### Feature Importance Plot ###
# get the predictions from the random forest object
y_pred = rf_model.predict(new_X_test)
# get the feature importances
importances = rf_model.feature_importances_
# sort the indexes
sorted_index = np.argsort(importances)
sorted_importances = importances[sorted_index]
sorted_features = features[sorted_index]
# plot the feature importances using a horizontal bar plot
fig, ax = plt.subplots()
ax.barh(sorted_features, sorted_importances)
ax.set_xlabel('Importances')
ax.set_ylabel('Features')

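### eli5 ###

Looking into eli5 a bit, I found that it supports even catboost! It also supports keras, though only for image-related models, and lime, though only for text. This was my first time using it, and it feels very intuitive and nice.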

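rf_model = best_rf.best_estimator_.named_steps['model']
# same column order as the FeatureUnion output: categorical first, then numerical
features = np.array(categorical_features + numerical_features)
# global feature weights of the forest
eli5.show_weights(rf_model, feature_names=features)
# explanation of one randomly sampled test row
eli5.show_prediction(rf_model, new_X_test.sample(1))
# the same kind of explanation, returned as a DataFrame
eli5.explain_prediction_df(rf_model, new_X_test.iloc[0])

from eli5.permutation_importance import get_score_importances
from sklearn.metrics import accuracy_score

# scoring function used for permutation importance
def score(X, y):
    y_pred = rf_model.predict(X)
    return accuracy_score(y, y_pred)

base_score, score_decreases = get_score_importances(score, new_X_test.values,
                                                    y_test, n_iter=10)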

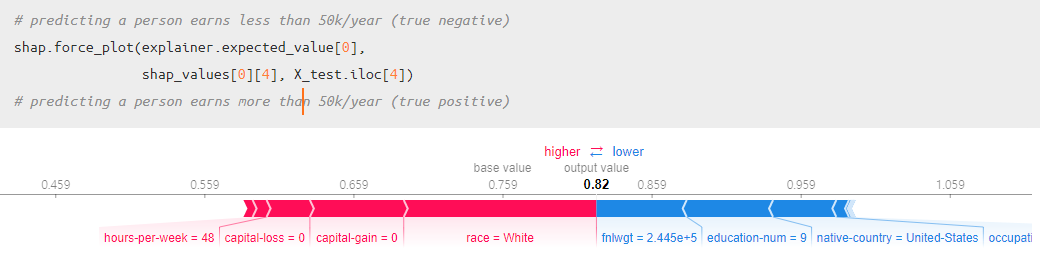
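`get_score_importances` returns the base score together with a list of arrays, one per iteration, each holding the score decrease for every feature. Averaging over the iterations gives one importance per feature. To make the idea concrete, the following is a plain NumPy sketch of permutation importance. It is not eli5's implementation, and the helper `permutation_score_decreases`, the toy data, and the toy scoring function are all hypothetical.

```python
import numpy as np


def permutation_score_decreases(score_func, X, y, n_iter=10, random_state=0):
    """Return (base_score, list of per-iteration arrays of score decreases)."""
    rng = np.random.default_rng(random_state)
    base_score = score_func(X, y)
    decreases = []
    for _ in range(n_iter):
        per_feature = np.empty(X.shape[1])
        for j in range(X.shape[1]):
            X_perm = X.copy()
            # Shuffle one column to break its link with the target.
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            per_feature[j] = base_score - score_func(X_perm, y)
        decreases.append(per_feature)
    return base_score, decreases


# Toy setup: the "model" thresholds column 0 only; column 1 is pure noise.
rng = np.random.default_rng(42)
X_toy = rng.normal(size=(500, 2))
y_toy = (X_toy[:, 0] > 0).astype(int)


def toy_score(X, y):
    y_pred = (X[:, 0] > 0).astype(int)
    return float(np.mean(y_pred == y))


base, decreases = permutation_score_decreases(toy_score, X_toy, y_toy)
mean_decrease = np.mean(decreases, axis=0)  # one importance per feature
std_decrease = np.std(decreases, axis=0)    # spread across iterations
print(base, mean_decrease, std_decrease)
```

The same `np.mean(..., axis=0)` step can be applied to the list returned by `get_score_importances` to rank the features of the random forest.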

### shap ###

shap provides a lot of functions, which makes it pleasant to use. The blogs I referred to are linked at the end of this post, so take a look at them as well!

import shap
shap.initjs()
# Create the explainer object
explainer = shap.TreeExplainer(rf_model)
print('Expected Value:', explainer.expected_value)
# get the shap values from the explainer
shap_values = explainer.shap_values(new_X_test)

force_plot

See this post for reference (https://data-newbie.tistory.com/254)

decision_plot

dependence_plot

embedding_plot
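The plots listed above all visualise the same quantity: per-feature SHAP values that, added to the expected value, reproduce the model output for a row. As a rough illustration of that additivity, here is a brute-force sketch that computes exact Shapley values for a tiny hand-written model in pure Python. It is not how `TreeExplainer` works internally, and the toy `model`, the `background` rows, and the helper `exact_shapley` are all assumptions made for this example.

```python
from itertools import combinations
from math import factorial


def exact_shapley(value_fn, n_features):
    """Exact Shapley values for a set function value_fn(frozenset) -> float."""
    players = range(n_features)
    phi = [0.0] * n_features
    for i in players:
        others = [p for p in players if p != i]
        for size in range(len(others) + 1):
            # Weight of a coalition of this size in the Shapley formula.
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi


# Toy model and data (assumptions for illustration only).
def model(x):
    return 2.0 * x[0] + x[1] * x[2]


background = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0), (2.0, 0.0, 1.0), (1.0, 2.0, 0.0)]
x = (3.0, 1.0, 2.0)


def value_fn(known):
    # Average model output with the "known" features fixed to x's values
    # and the remaining features taken from each background row.
    total = 0.0
    for row in background:
        mixed = tuple(x[j] if j in known else row[j] for j in range(len(x)))
        total += model(mixed)
    return total / len(background)


phi = exact_shapley(value_fn, 3)
expected_value = value_fn(frozenset())  # average prediction over the background
prediction = model(x)
print(phi, expected_value, prediction)
```

The check `expected_value + sum(phi) == prediction` (up to floating point error) is the property that force_plot and decision_plot draw: each feature pushes the output away from the expected value by its SHAP value.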

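Conclusion

Along the way, hunting for functions in eli5 and shap turned out to be more fun than what I originally set out to do, so I ended up exploring them.

I will look into how to interpret the results later. For now, use whichever tool interests you.

In any case, a pipeline has the big advantage of building everything from preprocessing to modeling in one go, and scikit-learn can be combined with model interpretation libraries, which is really nice.

The more I look at scikit-learn, the more I realize how feature-rich the package is.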

앙상블 학습 및 랜덤 포레스트 (Ensemble Learning and Random Forests): https://excelsior-cjh.tistory.com/166

Ensemble Learning case study: Model Interpretability (towardsdatascience.com)

shap dependence_plot documentation: https://slundberg.github.io/shap/notebooks/plots/dependence_plot.html

shap decision_plot documentation: https://slundberg.github.io/shap/notebooks/plots/decision_plot.html
